This post kicks off a multi-part series that distills an AWS talk I rate highly: “Network inspection design patterns that scale.” I’ll set the foundation here, then go pattern by pattern in the posts that follow.

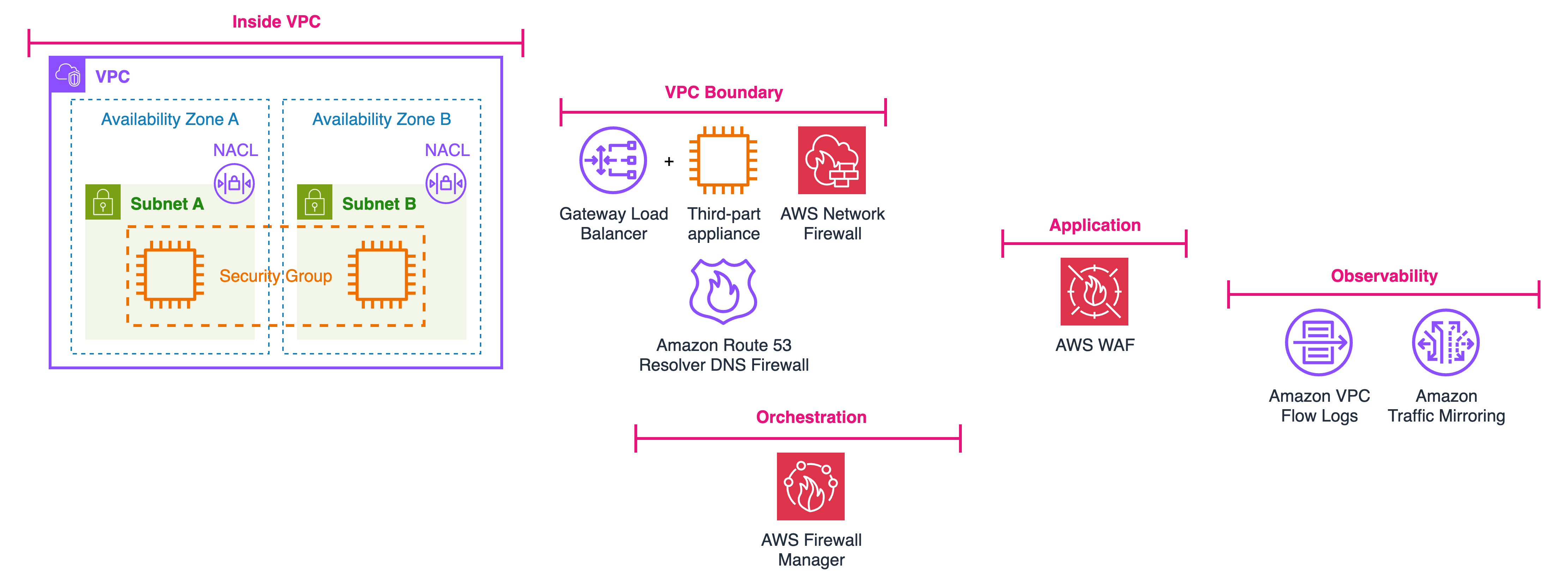

As your footprint grows, you’ll handle east-west (service-to-service) and north-south (internet or hybrid) traffic at scale. Getting consistent visibility and control across those paths is non-trivial: routing, symmetry, Availability Zones, and multi-account operations all influence whether packets actually pass through your controls. AWS provides native building blocks to help you do this without hand-managing appliances everywhere: AWS Network Firewall, Gateway Load Balancer (GWLB), Route 53 Resolver DNS Firewall, AWS WAF, and AWS Firewall Manager.

This series is a practical guide to the most common inspection flows and deployment models so you can choose an approach that scales operationally and financially.

In this series, “inspection” spans L3–L7 controls and visibility:

- AWS Network Firewall: managed network firewall with stateless and stateful rule engines; integrates at VPC boundaries and on Transit Gateway (TGW), NAT, and VPN/Direct Connect (DX) paths.

- Gateway Load Balancer (GWLB): a transparent gateway + load balancer for virtual appliances (including third-party firewalls/IDS/IPS), making scale-out and failure handling sane. You steer traffic to GWLB endpoints (GWLBE) and the service handles distribution.

- Route 53 Resolver DNS Firewall: domain-aware controls for egress DNS from your VPCs, including managed lists and advanced detections.

- AWS WAF: L7 web controls for CloudFront, ALB, API Gateway, AppSync, etc. (This sits in front of applications, not as a VPC data-path firewall.)

- AWS Firewall Manager: central policy distribution and drift control across accounts for the above services.

- Layered security: AWS’s “layered security” framing ties these pieces together; we’ll keep that lens throughout the series.

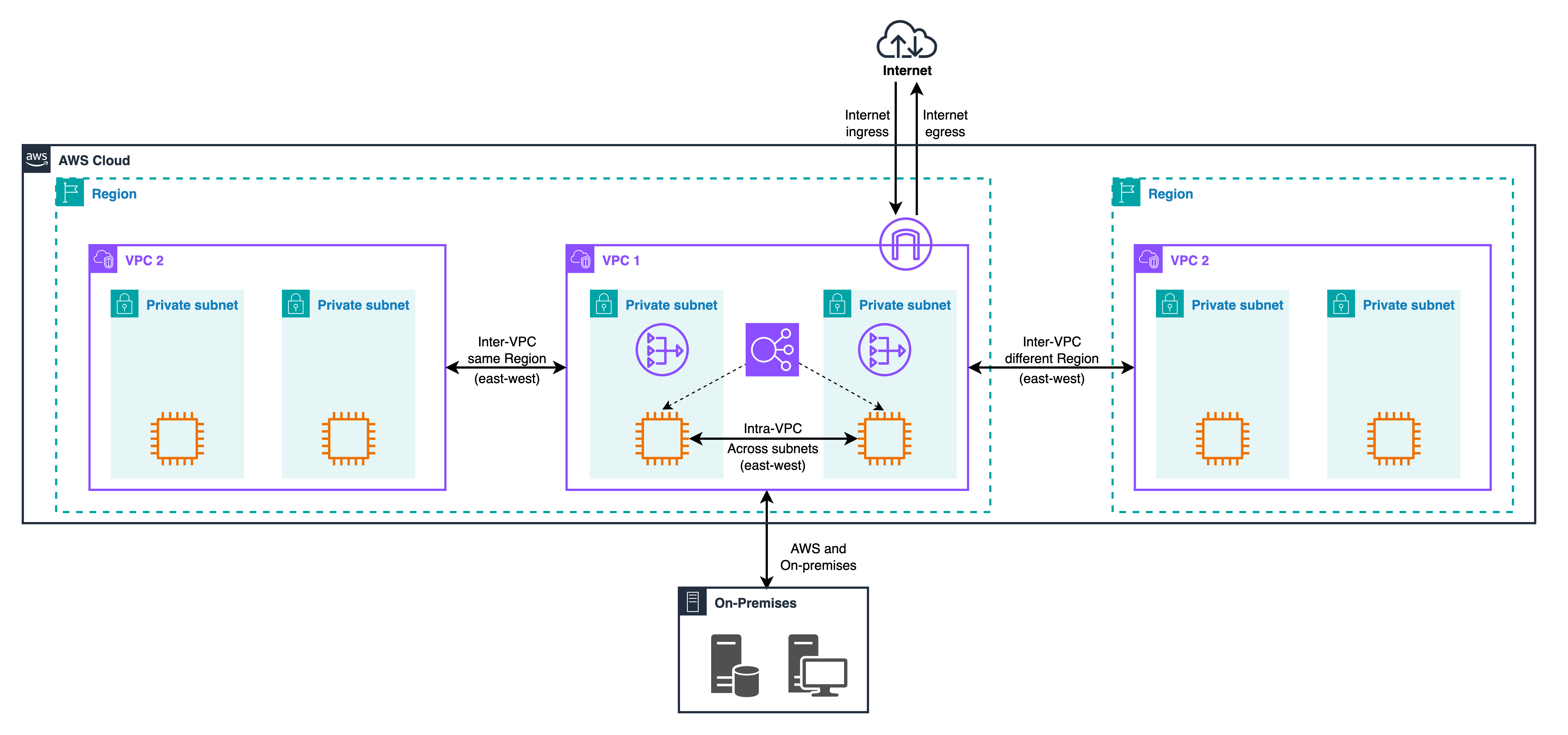

To make the rest of the series easy to navigate, I’ll frame everything around six flow categories. For each one, I’ll spell out what it is, where inspection logically sits, the routing mechanics that actually make packets hit your controls, and the most common pitfalls.

- Intra-VPC (east-west): traffic across subnets/AZs inside a VPC. Inspection sits between the communicating subnets or between the load balancer and its targets. In practice this usually means steering through a Gateway Load Balancer endpoint (GWLBE) in an “inspection” subnet or sending flows to AWS Network Firewall.

- Inter-VPC, same Region (east-west): traffic between VPCs via Transit Gateway (TGW) or Cloud WAN. Inspection sits in a central inspection VPC fronted by GWLB or Network Firewall, with spoke VPCs attached to the TGW or Cloud WAN.

- Inter-VPC, different Region (east-west): multi-Region variants and double-inspection nuances. Inspection often sits in a pair of inspection VPCs (one per Region), with TGW inter-Region peering or Cloud WAN carrying the fabric. Many teams intentionally double-inspect at Region boundaries to localize policy and blast radius.

- Hybrid (VPC ⇄ on-premises): Direct Connect/VPN, VGW ingress routing, and service-insertion paths. Inspection sits either centrally in an inspection VPC or distributed per application VPC. The key is steering ingress at the gateway (VGW/DX) into GWLB/NFW before workloads see it.

- Ingress (from internet): where to place inspection relative to ALB/NLB and how that affects client IP visibility and TLS. There are two classic placements. Gateway routing (before the LB) steers traffic from the internet gateway to GWLB/NFW, where the appliance sees the true client IP but needs public certificates for TLS decryption. More specific routing (between the LB and its targets) shows only traffic the LB has already accepted and allows private certificates for decryption, which reduces false positives.

- Egress (to internet): proxy/NAT vs. Network Firewall, and how “gateway routing” vs. “more specific routing” changes what the firewall actually sees.

The talk shows all six flows together on one high-level diagram, which is a useful mental anchor for the rest of the series.

Key AWS routing concepts that make (or break) inspection

Before deployment models, a few routing primitives explain why packets do, or don’t, hit your controls:

- Longest prefix match drives route priority: “more specific routes” win. This is the backbone of the VPC routing enhancement that lets you steer intra-VPC flows through a middlebox/GWLBE/Network Firewall.

- Ingress routing and gateway route tables let you steer traffic arriving via IGW/VGW to appliances/GWLB before workloads. This is central to both internet and hybrid ingress patterns.

- Symmetry matters: for stateful inspection across AZs/VPCs, Transit Gateway appliance mode keeps flows pinned to the same AZ/appliance to avoid asymmetric return paths.
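
To make the longest-prefix-match rule concrete, here is a minimal sketch in plain Python. It is not an AWS API: the route table contents, the CIDRs, and the target names (`vpce-inspection-example`, `nat-example`) are hypothetical values chosen for illustration. The only point is that a more specific route to an inspection endpoint wins over the VPC's `local` route.

```python
import ipaddress


def select_route(route_table, destination_ip):
    """Return the (cidr, target) entry with the longest matching prefix, or None."""
    dest = ipaddress.ip_address(destination_ip)
    matches = [
        (ipaddress.ip_network(cidr), target)
        for cidr, target in route_table
        if dest in ipaddress.ip_network(cidr)
    ]
    if not matches:
        return None
    # The most specific route is the one with the largest prefix length.
    network, target = max(matches, key=lambda m: m[0].prefixlen)
    return str(network), target


# Hypothetical route table for an application subnet in a 10.0.0.0/16 VPC.
APP_SUBNET_ROUTES = [
    ("10.0.0.0/16", "local"),
    ("10.0.2.0/24", "vpce-inspection-example"),  # more specific than "local"
    ("0.0.0.0/0", "nat-example"),
]

print(select_route(APP_SUBNET_ROUTES, "10.0.2.15"))      # steered to the endpoint
print(select_route(APP_SUBNET_ROUTES, "10.0.3.15"))      # falls back to local
print(select_route(APP_SUBNET_ROUTES, "93.184.216.34"))  # default route
```

Traffic to the `10.0.2.0/24` subnet is steered to the inspection endpoint, while every other in-VPC destination keeps using `local`.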

A final nuance is what the firewall actually sees. With gateway routing on egress, your device may see a NAT gateway’s address rather than the workload’s, which is fine for basic allow/deny but can complicate attribution and investigation. If you need workload identity at the packet layer, insert inspection with more specific routing before NAT or pair packet inspection with DNS policy so that many risky destinations are blocked at resolution time, not after a connection attempt.
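
The attribution difference can be shown with a toy model. This is a simulation in plain Python, not real packet handling: the `Packet` type, the hop names, and all addresses are hypothetical. It only records which source address a firewall would observe depending on whether it sits before or after source NAT.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str


NAT_GATEWAY_IP = "10.0.0.5"  # hypothetical address of the NAT gateway


def nat_gateway(packet):
    """Source NAT: rewrite the source to the NAT gateway's address."""
    return replace(packet, src=NAT_GATEWAY_IP)


def source_seen_by_firewall(packet, hops):
    """Walk the hops in order and return the source address the firewall observes."""
    seen = None
    for hop in hops:
        if hop == "firewall":
            seen = packet.src
        elif hop == "nat":
            packet = nat_gateway(packet)
    return seen


workload_packet = Packet(src="10.0.1.20", dst="198.51.100.7")

# Inspection inserted before NAT (more specific routing): workload address is visible.
print(source_seen_by_firewall(workload_packet, ["firewall", "nat"]))
# Inspection after NAT (gateway routing): only the NAT gateway's address is visible.
print(source_seen_by_firewall(workload_packet, ["nat", "firewall"]))
```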

Firewall deployment models on AWS

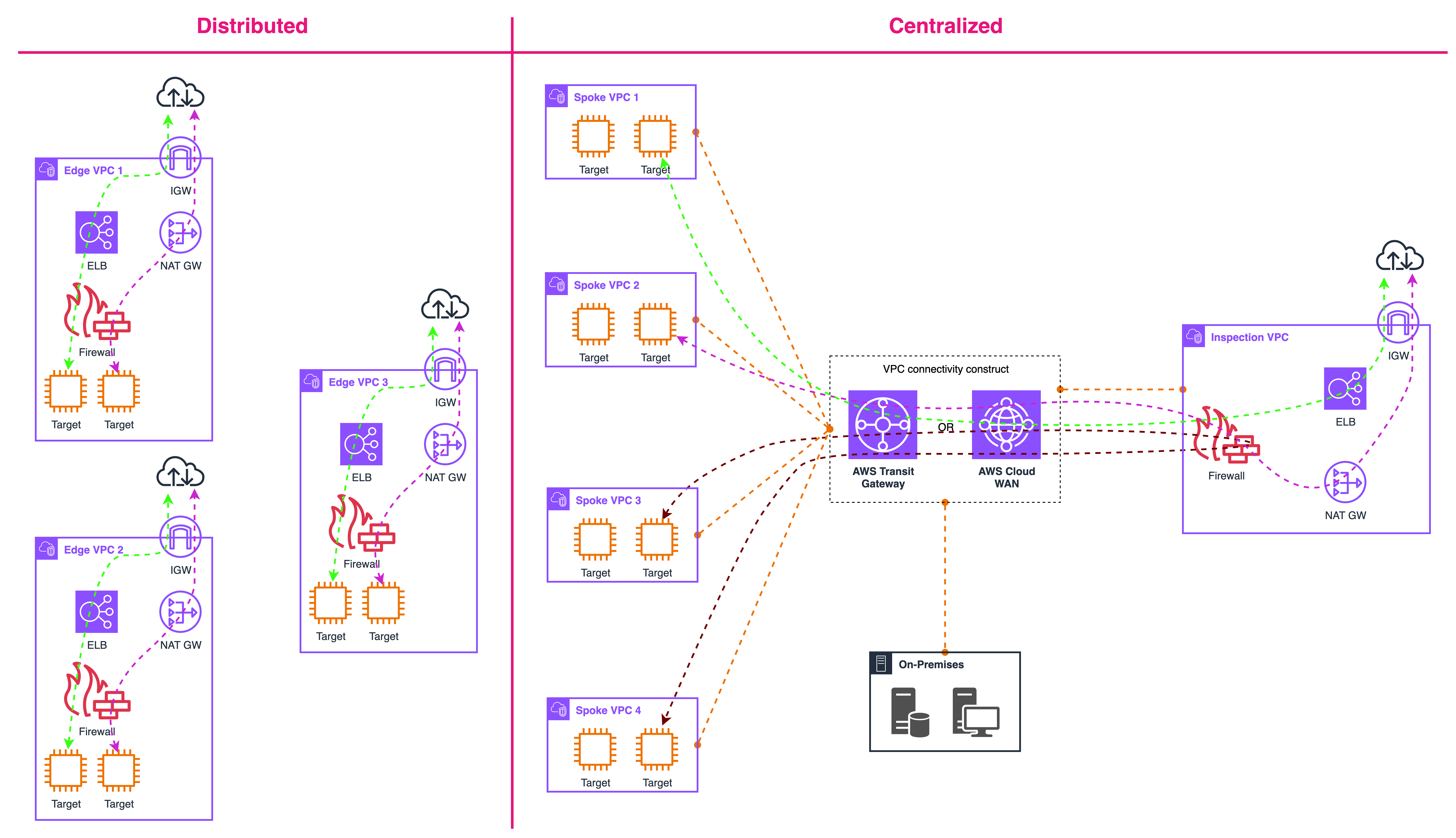

You can think of deployment models as answers to two architectural questions: where inspection lives and how traffic is steered there. The first axis is placement, either at the edge of each VPC, at a shared hub, or a mix of both. The second axis is insertion, using gateway route tables for north/south flows or more specific routing inside VPCs for east/west. A third, softer axis is the control plane: whether policy, logging, and lifecycle are operated per VPC or centralized through services such as AWS Firewall Manager and AWS Organizations.

In a distributed model, inspection sits close to workloads in every VPC, often one fleet per AZ. Thanks to VPC routing enhancements, you can cleanly interpose a Gateway Load Balancer endpoint for third-party appliances or an AWS Network Firewall endpoint for a managed data path between subnets or between a load balancer and its targets without hairpins. The data path stays short, failure domains are tight, and scale is linear: add capacity in the busy VPC or AZ and leave everything else untouched. This locality also reduces blast radius, since a misconfiguration in one environment is less likely to affect others. The trade-off is operational. You own many small edges, including route tables, rule sets, health checks, and logging sinks, so drift and inconsistency can creep in unless you standardize aggressively with infrastructure as code and push shared policy through Firewall Manager. Observability also starts out per VPC by default; plan early for log aggregation and uniform metrics so investigations do not turn into a scavenger hunt. Distributed placement fits when east/west throughput is high, tenant isolation is required, or latency budgets are tight and you do not want to send packets to a central hop only to bring them back.

A centralized model moves inspection into a dedicated inspection or security VPC and inserts that VPC on the path using AWS Transit Gateway or AWS Cloud WAN. Application VPCs attach to the hub, and traffic is steered through a GWLB or Network Firewall tier in the inspection VPC before continuing to other VPCs, the internet, or on-premises networks. The big advantage is governance: a single enforcement point, a single logging story, and one place to roll out new controls. It is also easier to run shared network services such as egress filtering, TLS proxying, and DLP once rather than everywhere. The price of that simplicity is careful engineering for throughput and symmetry. Stateful devices must see both directions of a flow. In practice that means enabling Transit Gateway appliance mode and aligning AZ affinity so return paths do not bypass inspection. You will typically place third-party devices behind Gateway Load Balancer to keep scale-out and failure handling transparent, and use Network Firewall when you want managed elasticity at VPC boundaries. Central hubs concentrate traffic, so watch aggregate limits such as attachments, bandwidth, and route scale, and model the cost of hairpins and cross-AZ data transfer. When you need to take this model global, Cloud WAN service insertion lets you steer by policy rather than rewriting every VPC’s route tables, which reduces change risk and keeps multi-Region rollouts predictable.
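
As a rough sketch of the appliance mode setting mentioned above, the helper below wraps the EC2 `modify_transit_gateway_vpc_attachment` call as exposed by boto3. The helper name, the attachment ID, and the `RecordingClient` stand-in are assumptions for illustration; the stand-in only records the call so the snippet runs without AWS credentials. In a real account you would pass `boto3.client("ec2")` instead.

```python
class RecordingClient:
    """Stand-in for boto3.client("ec2") that records calls instead of calling AWS."""

    def __init__(self):
        self.calls = []

    def modify_transit_gateway_vpc_attachment(self, **kwargs):
        self.calls.append(("modify_transit_gateway_vpc_attachment", kwargs))
        return {}


def enable_appliance_mode(ec2_client, attachment_id):
    """Enable appliance mode on the TGW attachment of the inspection VPC."""
    return ec2_client.modify_transit_gateway_vpc_attachment(
        TransitGatewayAttachmentId=attachment_id,
        Options={"ApplianceModeSupport": "enable"},
    )


client = RecordingClient()
enable_appliance_mode(client, "tgw-attach-EXAMPLE")  # hypothetical attachment ID
print(client.calls)
```

Keeping this in code (or in your infrastructure-as-code templates) makes the setting visible in review instead of living as a console toggle.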

In practice, most large organizations land on a hybrid model that mixes both. Centralize the things that benefit from shared governance, such as inter-VPC, ingress, and egress, while keeping distributed inspection for the highest-throughput or latency-sensitive east/west paths inside busy VPCs. Think of it as hub for north/south and locality for east/west. Cloud WAN can serve as the global fabric with regional inspection VPCs, while Route 53 Resolver DNS Firewall provides a universal first line of control regardless of where packet inspection happens. Hybrid also gives you a pragmatic migration path. You can start with a central hub to eliminate blind spots quickly, then carve out distributed insertion where performance or isolation demands it.

Here are some key implementation details. In distributed designs, decide where you want observability: before a load balancer (preserves client IP but requires public certificates for TLS decryption) or between the load balancer and targets (uses private certificates, sees only accepted traffic, and reduces noise). For egress, consider whether inspection will see the NAT gateway’s address or the original source; more specific routing before NAT often simplifies incident response. In centralized designs, document the appliance mode setting, AZ affinity strategy, and health-check behavior so failover is predictable. In both models, standardize route table shapes, naming, and logging destinations, enforce policy centrally with Firewall Manager, and keep a small library of repeatable patterns you can stamp across accounts.

The diagram shows distributed edges on the left: each VPC has its own IGW or NAT and a firewall or GWLB tier sitting close to targets. The right side shows a hub and spoke: multiple spoke VPCs feed a central connectivity construct, Transit Gateway or Cloud WAN, which steers traffic through an inspection VPC that contains your firewall tier, internet boundary, and any shared load balancers. Add an on-premises box connected to the hub and the picture also captures hybrid ingress service insertion. This image works as a reference for the series. Choose distributed when you need locality and isolation, choose centralized when you want governance and shared services, and combine them when scale and reality require both.

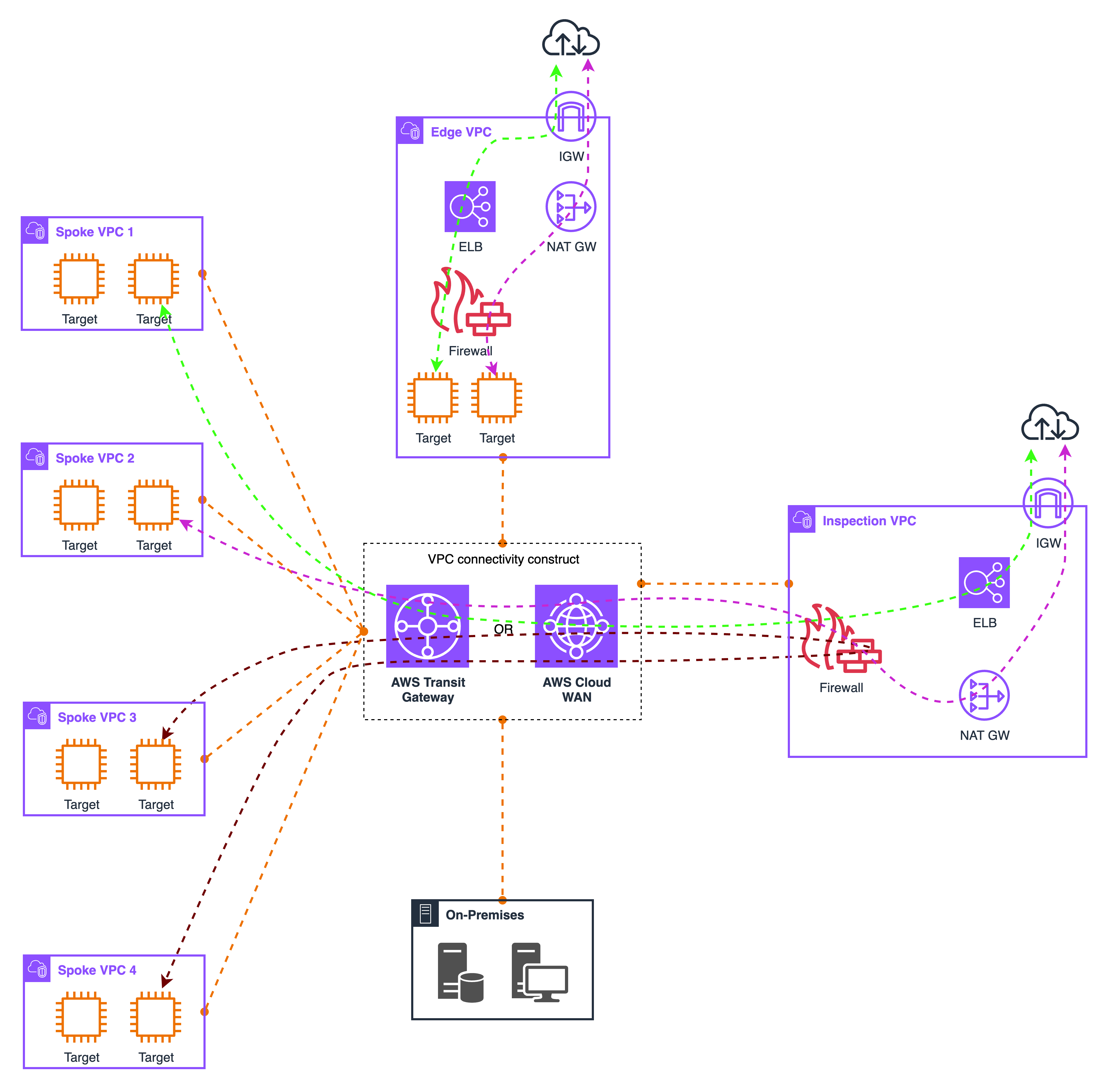

Most large organizations settle on a hybrid model because it lets them match placement to intent. Centralize the paths that benefit from shared governance and common services, then keep inspection close to the workloads for flows that are sensitive to latency or volume. In practice that means using an inspection VPC for inter-VPC traffic, internet ingress, internet egress, and hybrid connectivity to on-premises, while leaving busy east-west conversations inside application VPCs to a local insertion point. The hub gives you a single enforcement story, uniform logging, and one place to run shared capabilities such as TLS proxying, data loss prevention, and reputation filtering. The local insertion keeps packet paths short, contains blast radius inside each VPC and Availability Zone, and scales linearly with demand.

The same routing primitives power both sides of the model. Inside VPCs, more specific routes interpose a Gateway Load Balancer endpoint or an AWS Network Firewall endpoint between subnets or between a load balancer and its targets, which is ideal for high-throughput service-to-service inspection. At the boundaries, gateway route tables on the internet gateway or virtual private gateway steer north-south flows into the hub so you can observe the original client IP on ingress and consistently enforce egress policy before traffic leaves your estate. Transit Gateway or Cloud WAN provides the fabric between VPCs and Regions; use Transit Gateway appliance mode to keep stateful flows symmetric, and use Cloud WAN service insertion when you want policy to drive path selection across many accounts without touching every route table.

A hybrid design also benefits from a layered control plane. Push an organization-wide baseline through AWS Firewall Manager for Network Firewall, DNS Firewall, and WAF so that core protections are consistent everywhere, then allow scoped overrides per environment or per segment. Standardize logging and metrics into a central destination so investigations are fast regardless of where packets were inspected. Treat observability as a requirement: decide which hops will retain client IP, how TLS will be decrypted, and how to correlate logs from load balancers, firewalls, and NAT gateways. This reduces ambiguity during incidents and makes compliance reviews repeatable.

Think of migration as an incremental path rather than a big-bang change. Many teams start by standing up the central hub to eliminate blind spots quickly and to unify egress and hybrid ingress. Once that is stable, they carve out distributed insertion for the hot east-west paths that need lower latency or tighter isolation. Use infrastructure as code to stamp a small set of repeatable patterns, tag route tables and endpoints consistently, and document appliance mode and Availability Zone affinity so failover behaves predictably. Keep an eye on aggregate limits and data-transfer costs at the hub, and design for graceful degradation with health checks, fallback routes, and clear break-glass runbooks.

The hybrid diagram shows spoke VPCs attaching to Transit Gateway or Cloud WAN, with policy-driven paths into an inspection VPC. An application VPC uses local insertion for east-west traffic through a GWLB or Network Firewall endpoint, with an on-premises block connecting to the fabric. Choose central placement for governance and shared services, choose local placement for performance and containment.

How this series will unfold

This series is designed to move from foundations to practice with a steady increase in complexity. We begin with Intra-VPC east-west inspection, because understanding how to place controls between subnets (and across Availability Zones) establishes the routing fundamentals you’ll reuse everywhere else.

From there, we step into Inter-VPC inspection within a single Region using Transit Gateway and Cloud WAN. That is where symmetry, appliance insertion, and policy centralization start to matter at scale. Next, we extend the same ideas across Regions and show when and why teams perform inspection in each Region rather than trying to centralize everything in one place.

After the east-west patterns are solid, we pivot to hybrid connectivity and walk through how traffic arriving from on-premises is intercepted at the boundary before it reaches workloads. The final two parts focus on north-south: first ingress, where we compare inspection before a load balancer versus between the load balancer and its targets and explain how those choices affect client IP visibility and TLS decryption; then egress, where we contrast proxy-plus-NAT designs with Network Firewall-based designs and show how to regain original source visibility with routing.

Every part will include a concise diagram, a minimal set of route tables you can reproduce, a short decision lens for choosing between options, and a checklist you can lift into your own environment. We’ll also cover key settings like Transit Gateway appliance mode, Gateway Load Balancer endpoints, and DNS Firewall so you can translate concepts into concrete configurations.

Practical nuances to watch for (from experience)

Two small routing decisions often determine whether your inspection actually sees the traffic you think it does. First, decide where you want to observe packets. If you place inspection on the gateway path for ingress, the device sees the true client IP, which is ideal for reputation and geo policies but may require handling public certificates for TLS decryption. If you place inspection between the load balancer and the targets, you only see traffic already accepted by the load balancer and you can use private certificates, which often reduces noise for application teams. On egress, if you only inspect after NAT, the firewall will primarily see the NAT gateway’s address. That is fine for coarse controls, but it complicates attribution and incident response. You can avoid this by using more specific routing to insert inspection before NAT, or by pairing packet inspection with Route 53 Resolver DNS Firewall so many risky destinations are blocked at resolution time before any TCP handshake occurs.
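
To illustrate what "blocked at resolution time" means, here is a deliberately simplified model of a domain blocklist check. It is not the DNS Firewall implementation and does not cover the service's full matching semantics; the function names and domains are hypothetical. It assumes entries are either exact names or names with a leading `*.` wildcard.

```python
def normalize(name):
    """Lowercase the name and drop the trailing dot of a fully qualified name."""
    return name.rstrip(".").lower()


def entry_matches(entry, query):
    """Exact match, or suffix match when the entry starts with '*.'."""
    entry, query = normalize(entry), normalize(query)
    if entry.startswith("*."):
        # "*.bad.example" matches "cdn.bad.example" but not "bad.example" itself.
        return query.endswith(entry[1:])
    return query == entry


def resolve_decision(query, blocklist):
    """Return 'BLOCK' if any blocklist entry matches the queried name, else 'ALLOW'."""
    return "BLOCK" if any(entry_matches(e, query) for e in blocklist) else "ALLOW"


BLOCKLIST = ["*.bad.example", "tracker.example"]  # hypothetical entries

print(resolve_decision("cdn.bad.example.", BLOCKLIST))
print(resolve_decision("tracker.example", BLOCKLIST))
print(resolve_decision("docs.good.example", BLOCKLIST))
```

Because the decision happens on the name lookup, a blocked workload never obtains an address to connect to, so the packet-layer firewall does not need to attribute that attempt at all.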

Symmetry is the second recurring theme. Stateful devices need the forward and return legs of a flow to pass through the same inspection hop. At small scale, you can approximate this with careful AZ-aware routing, but as soon as you connect multiple VPCs the practical way to keep paths consistent is to enable Transit Gateway appliance mode on the attachment that contains your firewall endpoints. Appliance mode pins flows to the same Availability Zone for their lifetime and removes a whole class of intermittent failures that show up as “works for some connections, fails for others.” When you’re inserting third-party appliances, place them behind Gateway Load Balancer. GWLB gives you a transparent, routable endpoint, handles health checks and failover, and lets you scale horizontally without per-appliance route surgery. If you prefer a managed experience at VPC boundaries, AWS Network Firewall offers stateful inspection and integrates cleanly with IGW, NAT, VPN, and Direct Connect paths, which reduces the amount of undifferentiated plumbing you have to maintain.
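
The idea behind flow pinning can be sketched with a direction-independent hash. This is an illustration of the concept only, not the algorithm Transit Gateway actually uses; the function names, AZ names, and addresses are hypothetical. Sorting the two endpoints before hashing means the forward and return legs of a flow produce the same key and therefore select the same Availability Zone.

```python
import hashlib


def symmetric_flow_key(src_ip, src_port, dst_ip, dst_port, protocol):
    """Build a key that is identical for both directions of a flow."""
    endpoints = sorted([(src_ip, src_port), (dst_ip, dst_port)])
    return f"{protocol}|{endpoints[0]}|{endpoints[1]}"


def pick_az(flow_key, azs):
    """Map a flow key onto one of the available AZs deterministically."""
    digest = hashlib.sha256(flow_key.encode()).digest()
    return azs[int.from_bytes(digest[:8], "big") % len(azs)]


AZS = ["az-a", "az-b", "az-c"]  # hypothetical AZ names

forward = symmetric_flow_key("10.1.0.10", 44321, "10.2.0.20", 443, "tcp")
reverse = symmetric_flow_key("10.2.0.20", 443, "10.1.0.10", 44321, "tcp")

# Both directions land on the same AZ, so one stateful device sees the whole flow.
print(pick_az(forward, AZS) == pick_az(reverse, AZS))  # True
```

Without a direction-independent choice like this, each leg may be routed based on its own source AZ, which is exactly the "works for some connections, fails for others" symptom.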

Finally, keep your control plane as simple as the data path. Gateway route tables are the right tool when you need to intercept traffic at the boundary, because you can associate a route table with the internet gateway or virtual private gateway and enforce inspection before packets spread into multiple subnets. Inside the VPC, more specific routes let you interpose a middlebox between subnets without hairpins, which is what makes east-west inspection operationally viable. At organization scale, combine these routing primitives with a small number of patterns you repeat everywhere and add DNS Firewall globally so name-based policy is consistent even when packet inspection placement varies by environment. That blend keeps policy predictable and reduces the chance of silent bypasses that only appear under failure or during peak traffic.
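
A gateway route table boils down to two EC2 calls, sketched here through boto3's `associate_route_table` (with `GatewayId` for the edge association) and `create_route` (with `VpcEndpointId` as the target). The helper name, all resource IDs, the CIDR, and the `RecordingClient` stand-in are assumptions for illustration; the stand-in records calls so the snippet runs without AWS access.

```python
class RecordingClient:
    """Stand-in for boto3.client("ec2") that records calls instead of calling AWS."""

    def __init__(self):
        self.calls = []

    def associate_route_table(self, **kwargs):
        self.calls.append(("associate_route_table", kwargs))
        return {}

    def create_route(self, **kwargs):
        self.calls.append(("create_route", kwargs))
        return {}


def steer_ingress_through_endpoint(ec2_client, route_table_id, gateway_id,
                                   subnet_cidr, endpoint_id):
    """Associate a route table with a gateway and send a subnet's traffic to an endpoint."""
    # Edge association: the route table now applies to traffic entering via the gateway.
    ec2_client.associate_route_table(RouteTableId=route_table_id, GatewayId=gateway_id)
    # Traffic destined for the workload subnet goes to the inspection endpoint first.
    ec2_client.create_route(
        RouteTableId=route_table_id,
        DestinationCidrBlock=subnet_cidr,
        VpcEndpointId=endpoint_id,
    )


client = RecordingClient()
steer_ingress_through_endpoint(
    client, "rtb-EXAMPLE", "igw-EXAMPLE", "10.0.2.0/24", "vpce-EXAMPLE"
)
for name, kwargs in client.calls:
    print(name, kwargs)
```

The workload subnet's own route table needs the matching return route through the same endpoint, otherwise the return leg bypasses inspection.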

What’s next

Next we move straight into Part 2: Intra-VPC (East-West) Traffic Inspection and turn the foundations into concrete packet paths inside a single VPC. We’ll lay out baseline topologies for same-AZ and cross-AZ flows, walk through the route tables that steer subnet-to-subnet traffic through a Gateway Load Balancer endpoint or an AWS Network Firewall endpoint, and show precisely how to keep flows symmetric so stateful inspection behaves predictably. Expect a compact diagram you can mirror in your environment, copy-pasteable route snippets, and a decision checklist that weighs latency, failure isolation, and logging/attribution trade-offs. From there the series fans out: we’ll generalize the same mechanics to inter-VPC patterns, extend across Regions, fold in hybrid paths, and close with ingress and egress placements, so by the end you’ll have a repeatable playbook for every inspection path that matters.